As AI tools get more popular, the politics, infighting, and investment opportunities are shaking things up. Case in point:

FLUX is a family of open text-to-image models from the researchers behind Stable Diffusion. Why are the researchers behind Stable Diffusion not releasing a new version of Stable Diffusion? We'll get to that. But for now, suffice it to say that FLUX looks set to steal Stable Diffusion's crown as the best open text-to-image model available. Here's what you need to know.

What is FLUX.1?

FLUX (or FLUX.1) is a suite of text-to-image models from Black Forest Labs, a new company set up by some of the AI researchers behind innovations and models like VQGAN, Stable Diffusion, Latent Diffusion, and Adversarial Diffusion Distillation. The first FLUX models were only released in August 2024, but you can see why they've made such a big splash so quickly: they're seriously impressive.

At present, there are four FLUX models, and I swear that these are the official stylizations of the names:

- FLUX1.1 [pro]
- FLUX.1 [pro]
- FLUX.1 [dev]
- FLUX.1 [schnell]

FLUX1.1 [pro] and FLUX.1 [pro] (why does the decimal point move?) are only available through an API. They're designed as a dedicated option for enterprises and other big AI developers. While they're the best models in terms of prompt adherence and quality, they aren't the most interesting.

FLUX.1 [dev] and FLUX.1 [schnell] are both open models. FLUX.1 [dev] is available as an API and through Hugging Face for non-commercial purposes. FLUX.1 [schnell] is released under an Apache 2.0 license: you can download it from Hugging Face and use it to do basically anything you like. It's free for commercial, non-commercial, and any other kind of use you can think of.

The two (and presumably more to follow) open models in the FLUX family are what make it stand out. Most other major text-to-image models, like DALL·E 3, Midjourney, Ideogram, and Google Imagen, are proprietary. The only way to use them is through official APIs. While there may be some limited options to fine-tune or customize their outputs, you can't change things too much.

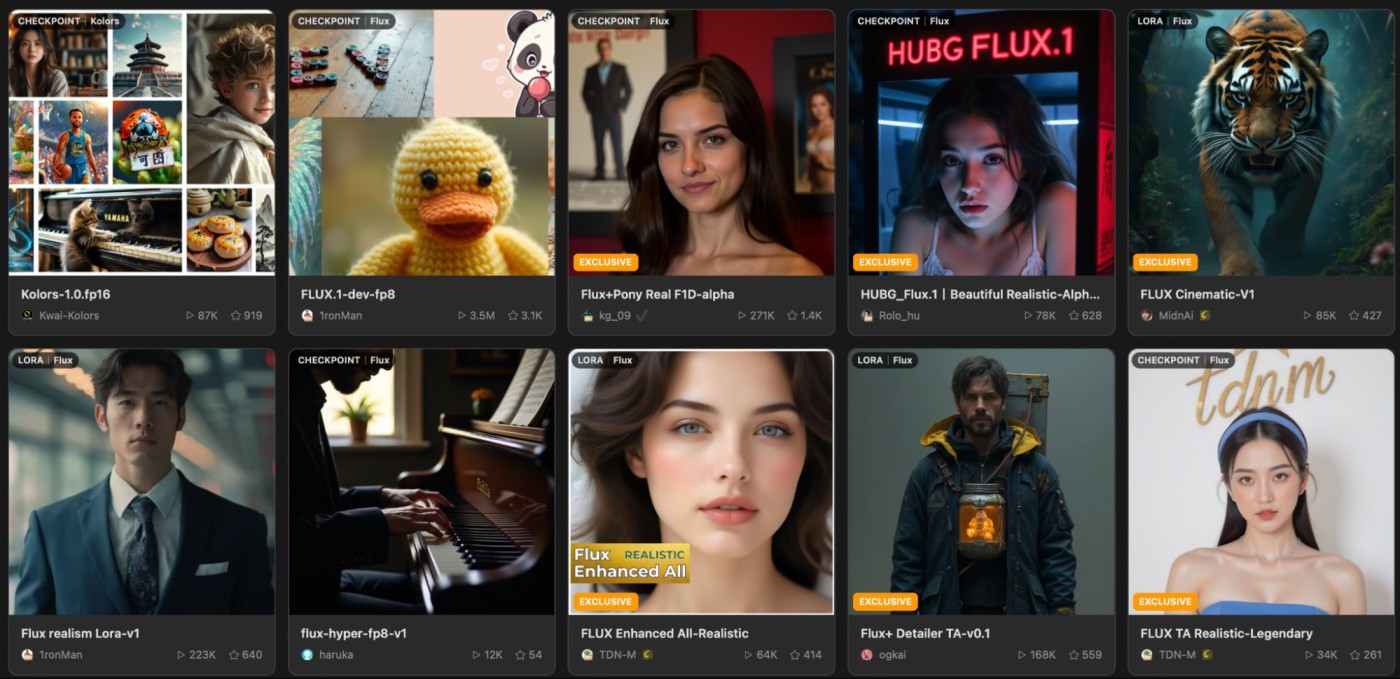

With open models like FLUX.1 [dev] and FLUX.1 [schnell], however, anyone with the technical skills to follow an online tutorial can fine-tune a new model using a technique called low-rank adaptation (LoRA). There are already FLUX fine-tunes for black light images, 1970s food, XKCD-style comics, and lots more.

It's this openness that made Stable Diffusion so popular, and it looks set to be the same with FLUX.
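If you're curious what LoRA is doing under the hood, here's a rough sketch of the core idea in plain Python: the big original weight matrix stays frozen, and the fine-tune only learns two small matrices whose product gets added on top. Everything here (the function names `matmul` and `lora_weight`, the tiny 4x4 matrix, the `scale` parameter) is made up for illustration and isn't taken from FLUX or any particular training tool.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(base, down, up, scale=1.0):
    """Return base + scale * (up @ down) without modifying the frozen base."""
    delta = matmul(up, down)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]


# A frozen 4x4 "pretrained" weight (the identity matrix, purely for illustration).
base = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

# A rank-1 adapter: `up` is 4x1 and `down` is 1x4, so their product is 4x4.
up = [[0.5], [0.0], [0.0], [0.0]]
down = [[0.0, 2.0, 0.0, 0.0]]

adapted = lora_weight(base, down, up)

# The adapter stores far fewer numbers than the weight it modifies.
base_params = len(base) * len(base[0])
adapter_params = len(up) * len(up[0]) + len(down) * len(down[0])
print(f"base weights: {base_params}, adapter weights: {adapter_params}")
```

In a real model the weight matrices have millions of entries, so the gap between the base and the adapter is far larger than in this toy case. That's a big part of why LoRA fine-tunes are small enough to share and swap around.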

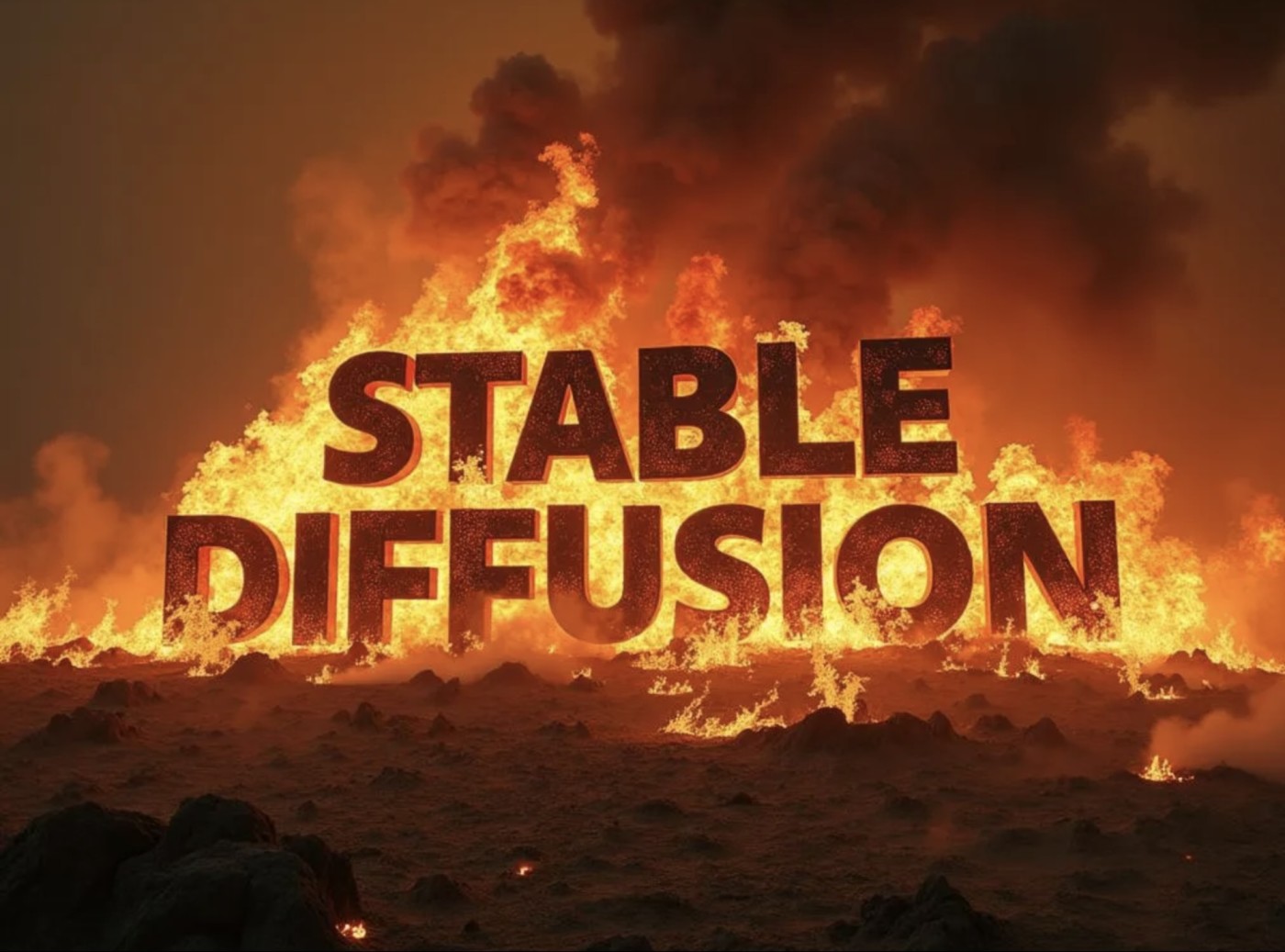

What happened to Stable Diffusion?

Stability AI, the company that commercialized Stable Diffusion, has had a really bad year. In brief:

- During the summer of 2023, a string of reports detailed "chaos" and a talent exodus inside Stability AI. A lot of the issues were tied to then-CEO Emad Mostaque, who, in a very unflattering Forbes profile, was accused of having "a history of exaggeration."
- A cash investment from Intel in November wasn't enough to save things, and by the middle of March 2024, three key researchers had left to start Black Forest Labs.
- Mostaque resigned a few days later, and since then, Stability AI has continued to flounder.
- After releasing Stable Diffusion 3 with a newly restrictive license, the models were banned on some of the leading AI image generation platforms. These changes were rolled back, but it didn't help the reputational damage.
- There's now a new CEO, some more investment, and a board with Sean Parker and James Cameron.
- Oh, and the company is still being sued by artists and stock photo library Getty.

All this is to say, while Stable Diffusion is still widely used, several key researchers are now working on FLUX. Plus, Stability AI, the company that was supporting the development, is in a bit of a mess. Maybe it will get through this rough patch and come out the other side, but for now, it looks like FLUX will become the go-to open text-to-image generator.

How does FLUX work?

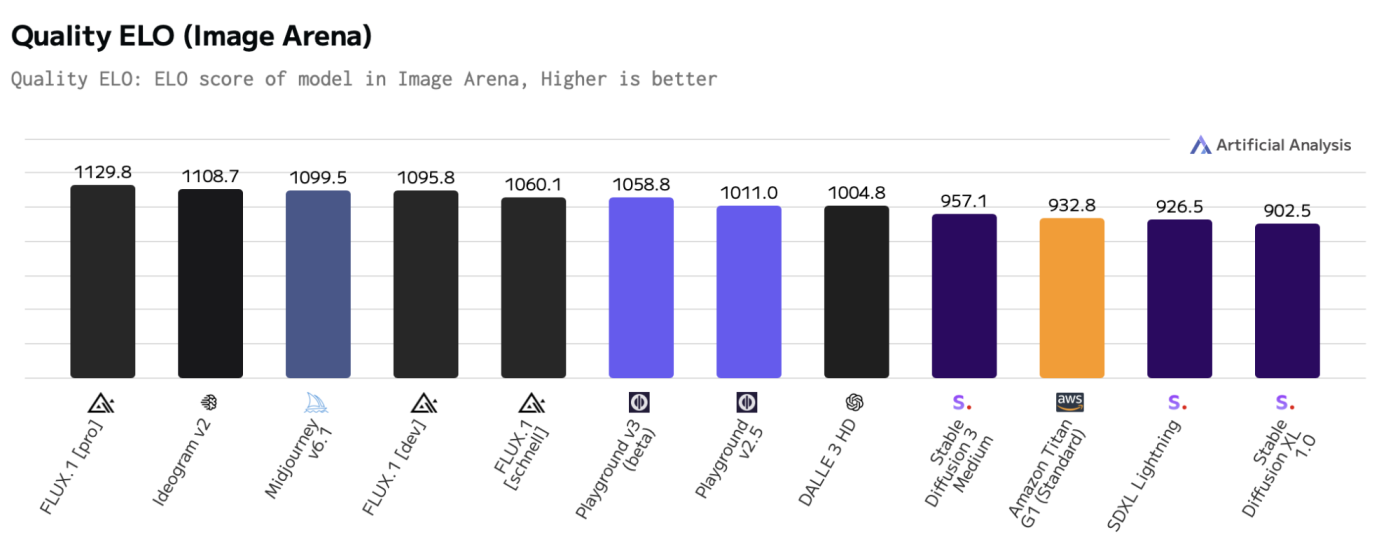

While the FLUX models apparently rely on state-of-the-art research like flow matching, rotary positional embeddings, and parallel attention layers, at the core, they're still diffusion models like Stable Diffusion, Midjourney, and DALL·E 3, though FLUX stacks up really well against them in head-to-head testing. As I write this, the three FLUX.1 models are in the top five on Artificial Analysis.

Diffusion models start with a random field of noise and then edit it in a series of steps to attempt to fit the prompt. The metaphor I keep coming back to is looking up at a cloudy sky, finding a cloud that looks a bit like a dog, and then having the power to snap your fingers to keep gradually shifting the clouds to become increasingly dog-like.

Of course, this belies the huge complexity and massive amount of research that it has taken to get to this point, as well as the amount of training and development it has taken for FLUX to be able to accurately render complex scenes, text, and even hands.
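To make the "start with noise, then nudge it step by step" idea a bit more concrete, here's a deliberately tiny sketch in Python. It is not how FLUX is implemented: the `toy_denoiser` function is a hypothetical stand-in that simply knows the right answer, whereas a real model is a huge neural network that has to predict it from the prompt. The five-number "image" and the step count are also just assumptions for the demo.

```python
import random


def toy_denoiser(noisy_image, prompt_target):
    """Hypothetical stand-in for a trained network.

    A real model predicts the clean image from the noisy one and the prompt;
    this toy version cheats and returns the known target.
    """
    return list(prompt_target)


def generate(prompt_target, steps=10, seed=0):
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in prompt_target]
    history = [list(image)]
    for step in range(steps):
        predicted = toy_denoiser(image, prompt_target)
        remaining = steps - step
        # Move a fraction of the way toward the prediction, so the final
        # step lands on it.
        image = [x + (p - x) / remaining for x, p in zip(image, predicted)]
        history.append(list(image))
    return image, history


def distance(a, b):
    """Euclidean distance between two equally sized lists of numbers."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


target = [0.0, 0.5, 1.0, 0.5, 0.0]  # pretend this is "a dog"
final, history = generate(target, steps=10)
errors = [distance(h, target) for h in history]
print([round(e, 3) for e in errors])
```

Each printed number is how far the current "image" is from the target, and the list shrinks toward zero as the steps go by. That's the cloud gradually becoming more dog-like.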

How to use FLUX.1

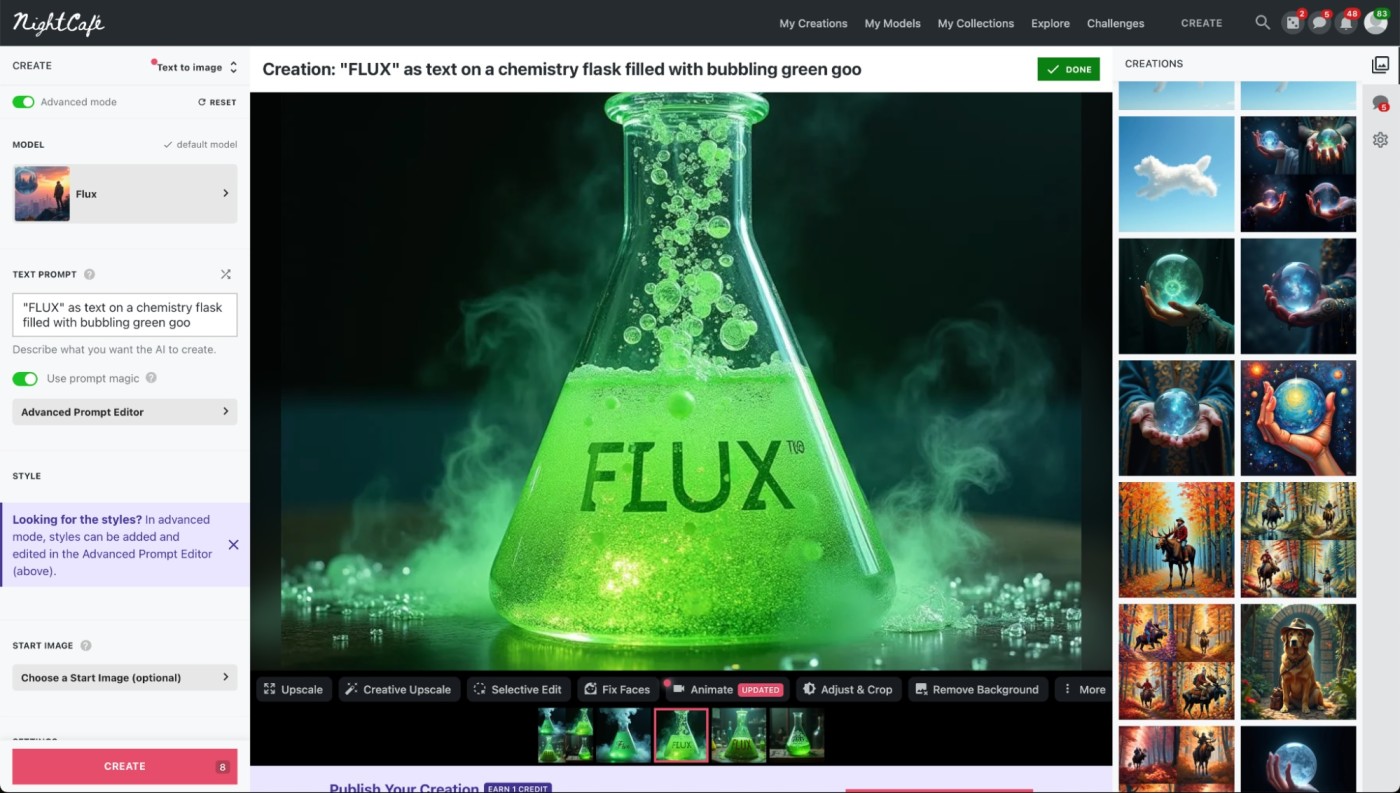

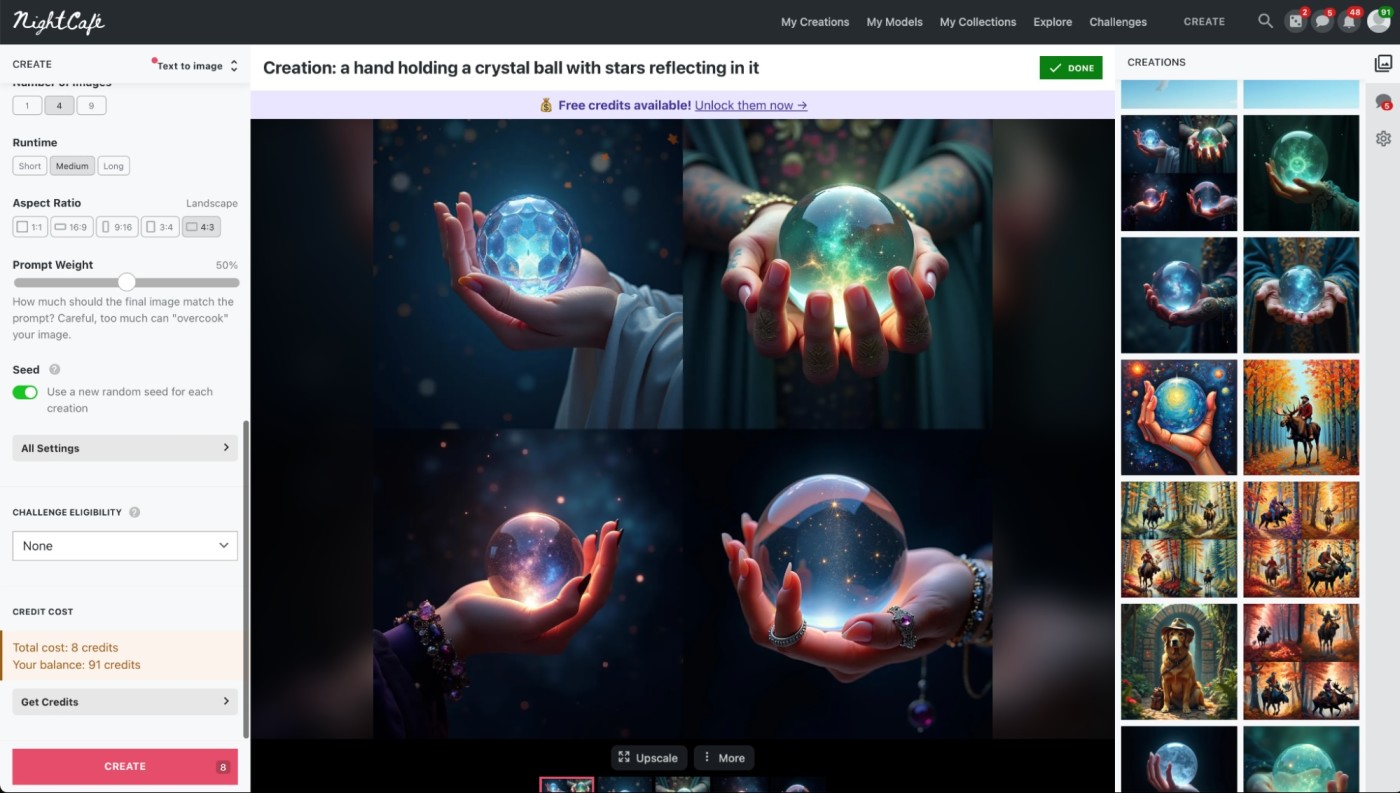

The simplest way to use FLUX is through an online art generator. I've been using NightCafe for all the images in this article, but Tensor.Art and Civitai also have FLUX as well as fine-tuned versions available. (Though be warned: these platforms aren't always safe for work.) Just sign up for a free trial, select FLUX as the model, enter a prompt, and see where you get to.

If you're more technically minded, you can download FLUX.1 [dev] and FLUX.1 [schnell] from Hugging Face and get them running locally on your machine using something like ComfyUI. This allows you to use features like in-painting. Alternatively, you can also use FLUX through its API.

FLUX has only been available for a couple of months, but it's already been embraced by the open AI community. If this is your first time hearing about it, you can expect to see a lot more of it in the coming months.
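For anyone taking the local route, here's a minimal sketch of generating an image with FLUX.1 [schnell] from Python. It uses Hugging Face's `diffusers` library rather than ComfyUI, which is my substitution and not something covered above. The repository ID and the sampling settings are assumptions based on the model's Hugging Face page, so check them there before relying on them. You'd also need `torch`, `diffusers`, and `accelerate` installed, plus enough disk space and memory for a multi-gigabyte download.

```python
MODEL_ID = "black-forest-labs/FLUX.1-schnell"  # assumed Hugging Face repo id


def schnell_settings(seed=0):
    """Sampling settings assumed from the model card for the [schnell] variant."""
    return {
        "guidance_scale": 0.0,       # [schnell] is meant to run without guidance
        "num_inference_steps": 4,    # a distilled model, so very few steps
        "max_sequence_length": 256,  # prompt length limit for this variant
        "seed": seed,
    }


def generate_image(prompt, out_path="flux-schnell.png", seed=0):
    """Generate one image and save it; downloads the model on first use."""
    # Heavy imports live inside the function so the file can be imported
    # without torch or diffusers installed.
    import torch
    from diffusers import FluxPipeline

    settings = schnell_settings(seed)
    pipe = FluxPipeline.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)
    pipe.enable_model_cpu_offload()  # trades speed for lower GPU memory use
    generator = torch.Generator("cpu").manual_seed(settings.pop("seed"))
    image = pipe(prompt, generator=generator, **settings).images[0]
    image.save(out_path)
    return out_path
```

Calling `generate_image("a cloud shaped like a dog in a blue sky")` should write a PNG to the current folder. Expect the first run to be slow while the model downloads.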
