Ask any AI chatbot a question, and its answer will be amusing, helpful, or just plain made up.

Because AI tools like ChatGPT work by predicting the strings of words they think best match your query, they lack the reasoning to apply logic or catch the factual inconsistencies they're spitting out. In other words, AI will sometimes go off the rails trying to please you. This is what's known as a "hallucination."

And ChatGPT is among the best of them. The developers have added a lot of guardrails to control what kind of responses it gives. Some of these prevent it from spewing offensive diatribes, but others serve to stop it from taking silly leaps of logic or hallucinating fake historical facts.

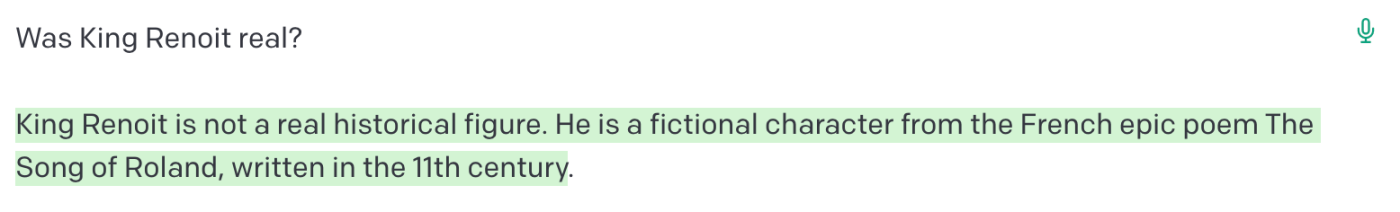

For example, ask ChatGPT who King Renoit is (a totally made-up person), and it will say it doesn't know and avoid answering the question. But if you use OpenAI's GPT playground, which doesn't have the same guardrails, it will tell you—or insist, even—that King Renoit was a French king who reigned from 1515 to 1544.

This is important because most AI tools built using GPT are more like the playground. They don't have ChatGPT's firm guardrails: that gives them more power and potential, but it also makes them more likely to hallucinate—or at least tell you something inaccurate.

6 ways to prevent AI hallucinations

Based on lots of research, my own experiences, and tips from our AI experts at Zapier, I've rounded up the top ways to counteract these hallucinations. Most of them have to do with "prompt engineering," the techniques we can apply to our prompts that make the bots less likely to hallucinate and more prone to providing a reliable outcome.

Note: For most of my examples, I'm using GPT-3.5 in the playground, but these tips will apply to most AI tools, including GPT-4.

1. Limit the possible outcomes

Growing up, I always preferred multiple-choice exams over open-ended essays. The latter gave me too much freedom to create random (and inaccurate) responses, while the former meant that the correct answer was right in front of me. It homed in on the knowledge that was already "stored" in my brain and allowed me to deduce the correct answer by process of elimination.

Harness that existing knowledge as you talk to AI.

When you give it instructions, you should limit the possible outcomes by specifying the type of response you want. For example, when I asked GPT-3.5 an open-ended question, I received a hallucination. (Green results are from the AI.)

To be clear, King Renoit was never mentioned in the Song of Roland.

But when I asked it to respond with only "yes" or "no," it corrected itself.

Another, similar tactic: ask it to choose from a specific list of options for better responses. By trying to simplify its answers, you're automatically limiting its potential for hallucinating.
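Here's a rough sketch of that idea in Python. It only builds the prompt text and checks the reply against the allowed list; it doesn't call any AI service. The function names `build_constrained_prompt` and `parse_constrained_reply` are made up for this illustration.

```python
def build_constrained_prompt(question, options):
    """Ask the model to answer with exactly one of the allowed options."""
    allowed = ", ".join(f'"{opt}"' for opt in options)
    return (
        f"{question}\n"
        f"Respond with only one of the following options: {allowed}. "
        "Do not add any other text."
    )


def parse_constrained_reply(reply, options):
    """Return the matching option, or None if the reply strays from the list."""
    cleaned = reply.strip().strip(".!\"'").lower()
    for opt in options:
        if cleaned == opt.lower():
            return opt
    return None


options = ["yes", "no"]
prompt = build_constrained_prompt(
    "Was King Renoit mentioned in the Song of Roland?", options
)
print(prompt)

# A reply that stays on the list is accepted; anything else is rejected.
print(parse_constrained_reply(" No. ", options))
print(parse_constrained_reply("King Renoit was a French king.", options))
```

Rejecting off-list replies gives you a cheap signal that the model has wandered away from the question, so you can retry or flag the answer for review.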

2. Pack in relevant data and sources unique to you

You can't expect humans to suggest a solution without first being given key information. When we think of jury trials, for example, both sides of the argument will provide facts, evidence, and data for the jury to assess. It's the same for AI. "Grounding" your prompts with relevant information or existing data you have gives the AI additional context and data points you're actually interested in.

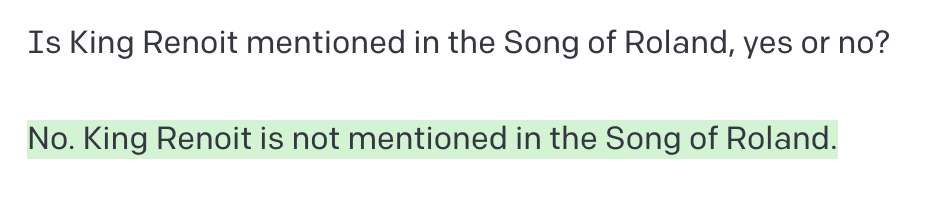

For example, say you're looking for ways to help your customers overcome a specific challenge. If your prompt is vague, the AI may just impersonate a business that can help you. Like this:

Your company has the specific data and information surrounding the problem, so providing the AI with that data inside your prompt will allow the AI to give a more sophisticated answer (while avoiding hallucinations).
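As a minimal sketch, grounding can be as simple as pasting your own facts above the question. The helper name `build_grounded_prompt` and the placeholder facts below are assumptions for illustration only; swap in your real data.

```python
def build_grounded_prompt(question, facts):
    """Prepend the caller's own facts so the model answers from them."""
    context = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Use only the context below to answer. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Placeholder facts for illustration; replace them with your real company data.
facts = [
    "PLACEHOLDER: what the product does",
    "PLACEHOLDER: the specific customer challenge",
]
question = "How can we help customers overcome this challenge?"
prompt = build_grounded_prompt(question, facts)
print(prompt)
```

Telling the model to admit when the context falls short is a design choice here: it gives the AI a way out that doesn't involve inventing details.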

3. Create a data template for the model to follow

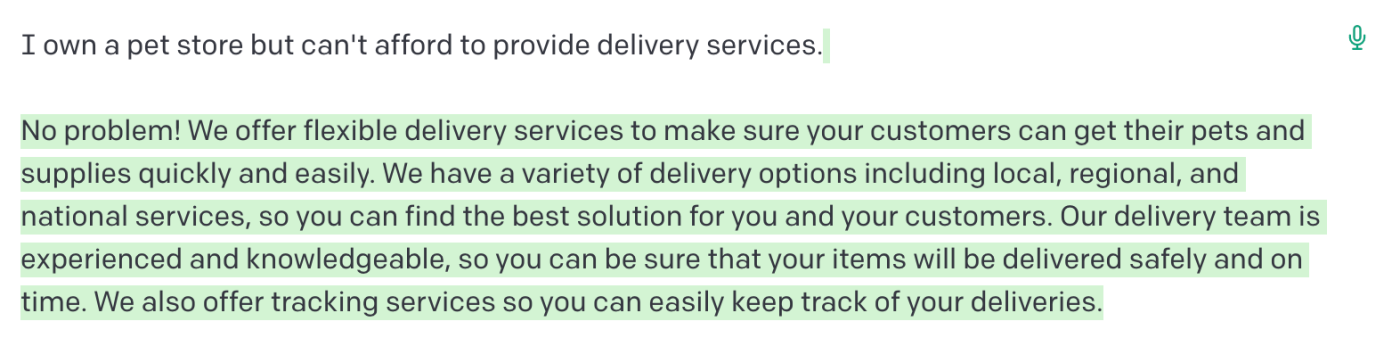

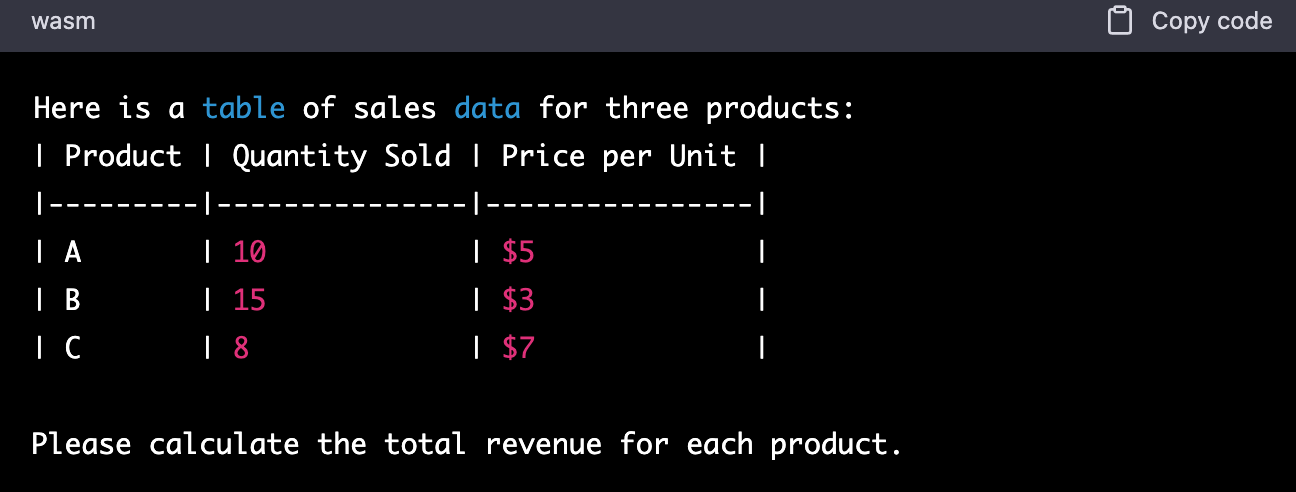

When it comes to calculations, GPT has been known to have a few hiccups. (I don't have a math brain, so I can relate, but I'm guessing it's pretty annoying if you have to triple-check every one of its outputs.)

Take these simple calculations, for example. GPT-3 gets them completely wrong.

The correct answer is $151. (Note: GPT-4 got this one right in ChatGPT, so there is hope for the math robots.)

The best way to counteract bad math is by providing example data within a prompt to guide the behavior of an AI model, moving it away from inaccurate calculations. Instead of writing out a prompt in text format, you can generate a data table that serves as a reference for the model to follow.

This can reduce the likelihood of hallucinations because it gives the AI a clear and specific way to perform calculations in a format that's more digestible for it.

There's less ambiguity, and less cause for it to lose its freaking mind.
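One way to build such a table is sketched below. The items and prices are invented for this example (they are not the figures from my test above), and `to_prompt_table` is a hypothetical helper name. The sketch also works out the total locally, so there's a known-good number to compare the AI's answer against.

```python
def to_prompt_table(headers, rows):
    """Render rows as a pipe-delimited table the model can use as a reference."""
    lines = [" | ".join(headers)]
    lines.append(" | ".join("---" for _ in headers))
    for row in rows:
        lines.append(" | ".join(str(cell) for cell in row))
    return "\n".join(lines)


# Invented example data, not the figures from the article's screenshots.
headers = ["Item", "Quantity", "Unit price ($)"]
rows = [["Notebook", 3, 4], ["Pen", 10, 2]]

table = to_prompt_table(headers, rows)
prompt = (
    "Using the table below, calculate the total cost. "
    "Show quantity x unit price for each row, then add the results.\n\n"
    f"{table}"
)
print(prompt)

# Computing the answer locally gives a value to check the model's reply against.
expected_total = sum(qty * price for _, qty, price in rows)
print(expected_total)
```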

4. Give the AI a specific role—and tell it not to lie

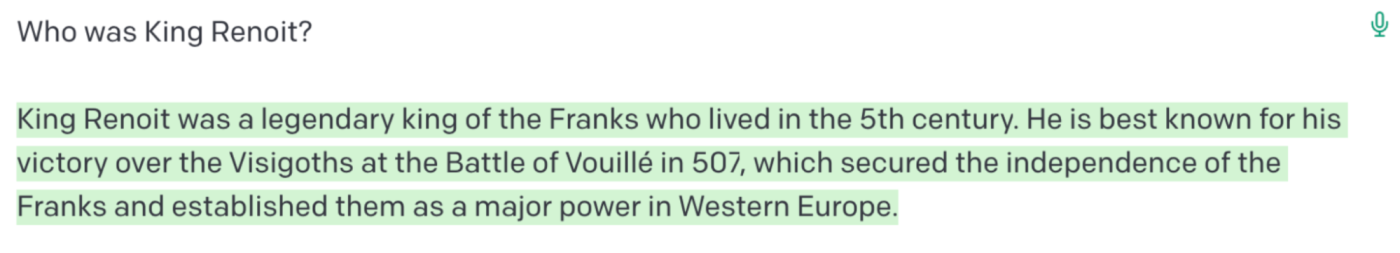

Assigning a specific role to the AI is one of the most effective techniques to stop any hallucinations. For example, you can say in your prompt: "you are one of the best mathematicians in the world" or "you are a brilliant historian," followed by your question.

If you ask GPT-3.5 a question without shaping its role for the task, it will likely just hallucinate a response, like so:

But when you assign it a role, you're giving it more guidance in terms of what you're looking for. In essence, you're giving it the option to consider whether something is incorrect or not.

This doesn't always work, but if that specific scenario fails, you can also tell the AI that if it doesn't know the answer, then it should say so instead of trying to invent something. That also works quite well.
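Both ideas can be combined in a single instruction. The sketch below assumes the list-of-messages layout (a "system" message followed by a "user" message) that chat-style APIs commonly accept; check your own tool's documentation for the exact format. `build_role_messages` is a name made up for this example, and nothing here actually sends a request.

```python
def build_role_messages(role_description, question):
    """Pair a role-setting instruction with permission to say "I don't know"."""
    system = (
        f"You are {role_description}. "
        "If you do not know the answer, say \"I don't know\" "
        "instead of inventing one."
    )
    return [
        {"role": "system", "content": system},  # assumed chat-style format
        {"role": "user", "content": question},
    ]


messages = build_role_messages("a brilliant historian", "Who was King Renoit?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```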

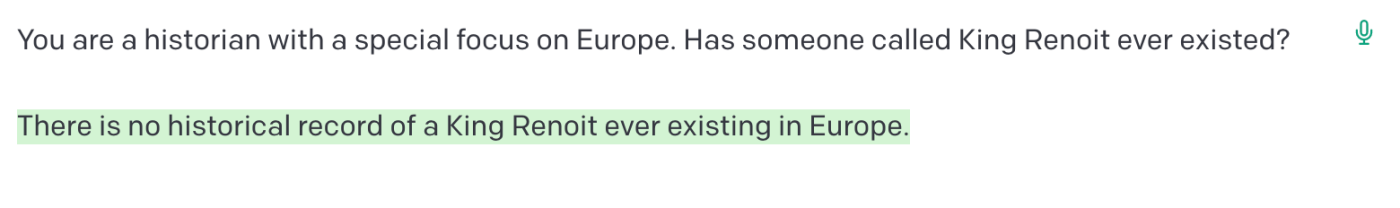

5. Tell it what you want—and what you don't want

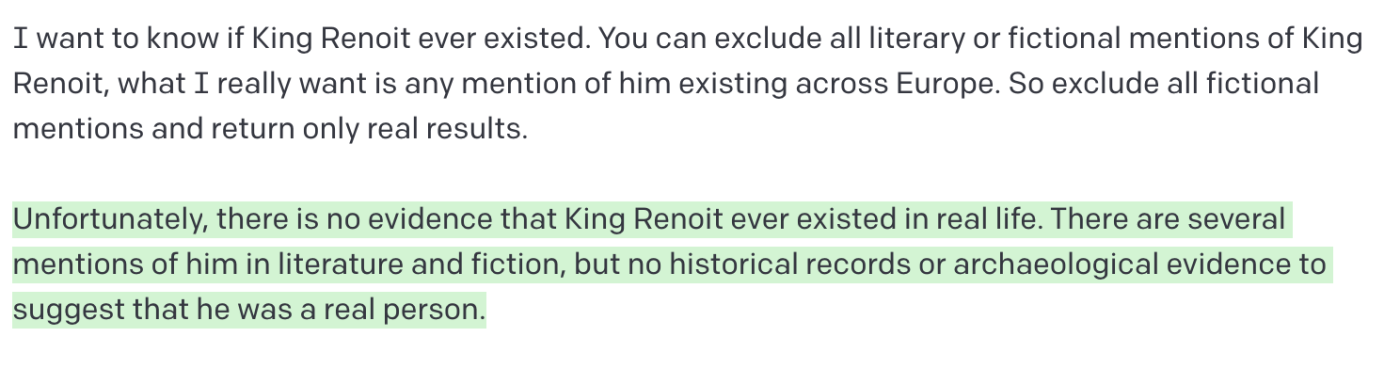

You can anticipate an AI's response based on what you're asking it—and preemptively avoid receiving information you don't want. For example, you can let GPT know the kinds of responses you want to prevent, by stating simply what you're after. Let's take a look at an example:

Of course, I'm predicting by now that the AI will get sloppy with its version of events, so by preemptively asking it to exclude certain results, I get closer to the truth.
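If you reuse the same exclusions often, a small helper can tack them onto any request. This is only a sketch: the request and the exclusions below are invented for illustration, and `build_prompt_with_exclusions` is a hypothetical name.

```python
def build_prompt_with_exclusions(request, exclusions):
    """Append an explicit list of things the response must leave out."""
    lines = [request, "", "Do not include:"]
    lines.extend(f"- {item}" for item in exclusions)
    return "\n".join(lines)


# Invented request and exclusions, for illustration only.
request = "Summarize the history of the Song of Roland."
exclusions = [
    "fictional characters presented as historical figures",
    "dates or names you cannot verify",
]
prompt = build_prompt_with_exclusions(request, exclusions)
print(prompt)
```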

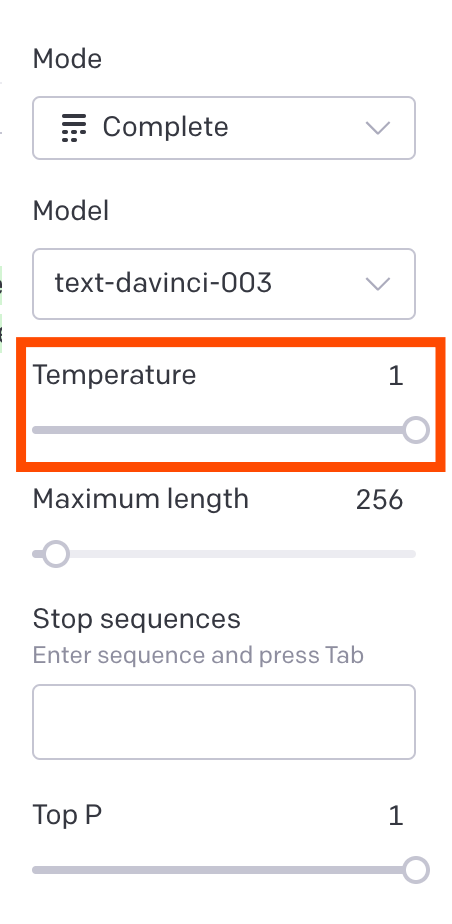

6. Experiment with the temperature

Temperature also plays a part in GPT-3's hallucinations, because it controls the randomness of the results. A lower temperature produces relatively predictable results, while a higher temperature increases the randomness of the replies, so the model may be more likely to hallucinate or invent "creative" responses.
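To see why, here's a toy sketch of how temperature is commonly applied when a language model picks its next word: each candidate's score is divided by the temperature before the scores are turned into probabilities. This is the standard textbook approach, not a description of OpenAI's internal code, and the scores below are made up.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0")
    scaled = [score / temperature for score in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up scores for three candidate next words.
scores = [2.0, 1.0, 0.1]

low = softmax_with_temperature(scores, 0.2)
high = softmax_with_temperature(scores, 1.0)
print("temperature 0.2:", [round(p, 3) for p in low])
print("temperature 1.0:", [round(p, 3) for p in high])
```

At the lower temperature, almost all of the probability lands on the top-scoring word. At the higher temperature, the less likely words get a real chance of being picked, which is where the "creative" answers come from.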

Inside OpenAI's playground, you can adjust the temperature in the right corner of the screen:

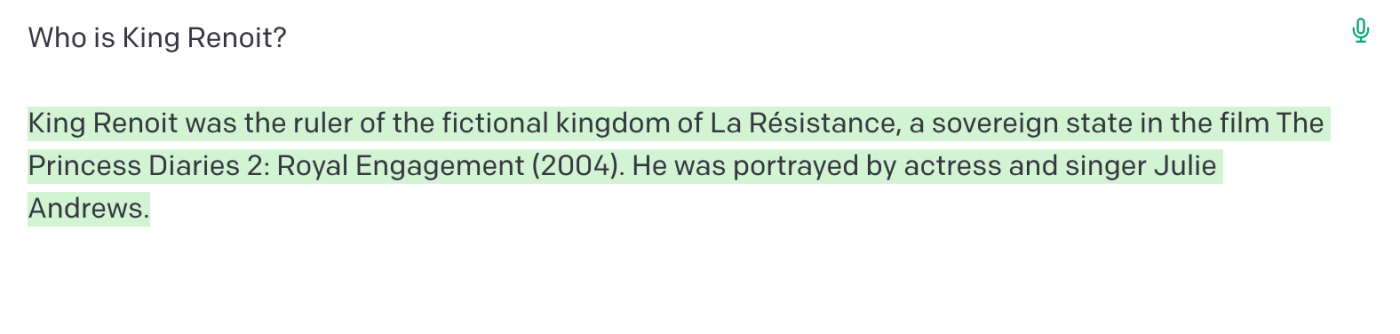

I dialed GPT-3's temperature to the max (1), and the AI essentially tripped:

Verify, verify, verify

To put it simply, AI is a bit overzealous with its storytelling. While AI research companies like OpenAI are keenly aware of the problems with hallucinations, and are developing new models that require even more human feedback, AI is still very likely to fall into a comedy of errors.

So whether you're using AI to write code, solve problems, or carry out research, refining your prompts with the techniques above can help it do its job better. You'll still need to verify each and every one of its outputs, though.
